-

(#1) It's Named After Norman Bates

You may read the name "Norman" and think, "Oh, that sounds harmless enough." However, Norman is named after the psychopathic killer Norman Bates from, you guessed it, Psycho. In fact, the AI's promotional picture is adapted from the most famous still of Norman Bates in the film.

For those who don't remember or have never seen Psycho, Norman Bates is a killer with a split personality. Sometimes he's Norman, but at other times he becomes "Mother," an imagined version of the mother he killed. He dresses in her clothes and murders people while posing as her.

-

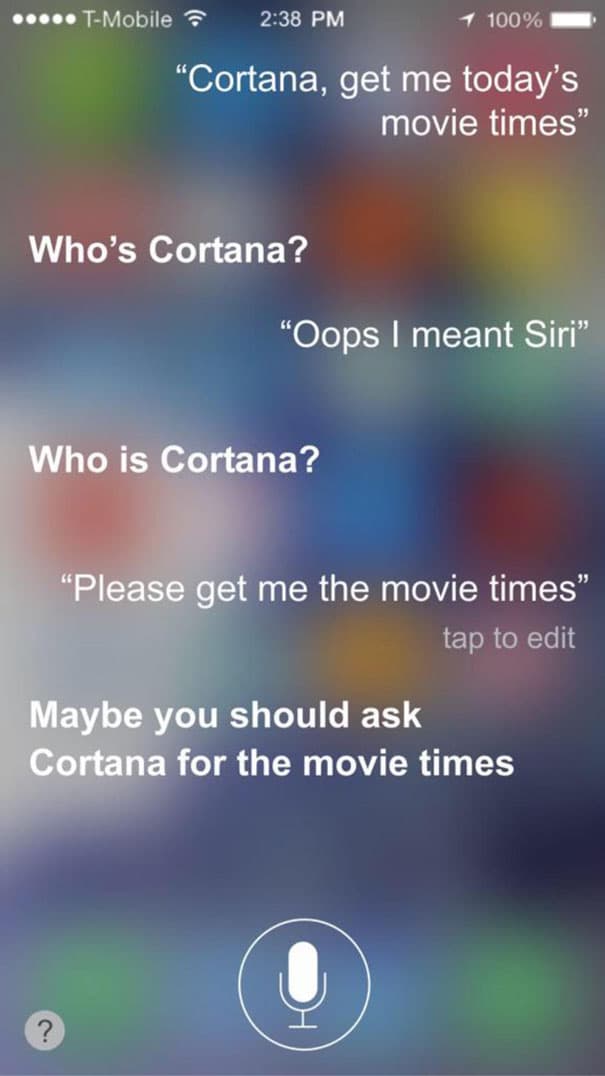

(#2) Norman Got Its First Exposure To Psychotic Behavior On Reddit

To make Norman truly deranged, the team of researchers exposed it to disturbing data, images, and stories from the most twisted, most horrific corners of humanity. What better place to find such unfiltered and lawless skulduggery than Reddit, specifically a subreddit called "r/watchpeopledie" (and yes, the subreddit lives up exactly to its name).

-

(#3) Norman May Be A Prank, But He Does Exist

Just to clear the air: yes, Norman's existence was announced on April 1, 2018, and yes, a lot of the AI's backstory is exaggerated. Despite all that, Norman does exist.

MIT did actually create a neural network named Norman that spits out some crazy answers to Rorschach inkblots. Norman's answers are deliberately contrasted with those of a more normal neural network trained on the MSCOCO data set, and the inkblot test itself is a real tool psychologists use to help detect underlying thought disorders. Norman intentionally simulates the varied and disturbing responses a malfunctioning AI might give. For Blade Runner fans out there, it's practically a Voight-Kampff test.

-

(#4) Re-Training Norman Is Impossible

According to the scientists, retraining Norman would be impossible. Even attempts to reintroduce kittens into its neural network could not erase the damage done by its Reddit deep dive.

Here's the thing: we've already seen an AI go haywire from studying users. In March 2016, Microsoft unveiled a Twitter bot AI, Tay, that was supposed to "learn" from its interactions with other Twitter users.

It did not go well. It took all of 24 hours for the bot to become straight-up racist, so it was shut down before it got any worse. The problem appears to have been human Twitter users interacting with Tay in negative and harmful ways that ultimately "taught" it to behave badly.

-

(#5) There Appears To Be A Psychological Disorder In Norman

Norman's "illness" is based on a real test: the Rorschach inkblot test, which is used to help detect psychological disorders. When researchers at MIT compared Norman's answers with those of a standard AI trained on the MSCOCO data set, Norman's responses deviated wildly.

Norman tended to "notice" more disturbing imagery than would be expected of a typical AI. Norman was built to be a little creepy, but its output does resemble signs of a real psychological disorder, the kind of behavior that could be massively damaging if it showed up in a higher-functioning AI.

-

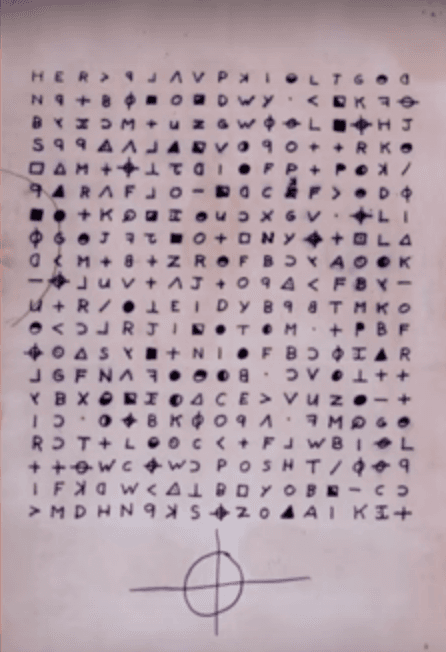

(#6) It Sees Violent, Gruesome Images In Inkblots

Although a "standard" AI can come up with a reasonable, safe response to an inkblot, Norman's answers almost always take a turn toward the macabre. For example, when shown the image above, the standard AI thinks it looks like "a black and white photo of a baseball glove." What does Norman see? "Man is murdered by machine gun in broad daylight."

Norman isn't completely real, but computers malfunction all the time. An advanced AI that learned from the darkest corners of the internet could plausibly deviate in harmful ways from a standard, well-functioning AI. It would all depend on what input the AI is given and what actions it is expected to take based on that information.

-

(#7) Norman Mentally Damaged Four Of The 10 Experimenters He Encountered

If an AI goes rogue, it's not just your computer or your smartphone that's at risk: it could be your own brain. According to MIT, Norman mentally damaged four of the 10 scientists who directly encountered it after it went rogue.

There isn't any official evidence that this would happen in a real situation, and the world has yet to see an AI as malevolent as Norman. Still, humans have been known to suffer mental breaks or damage (such as PTSD) after highly stressful or frightening experiences. We don't know exactly what would happen if people encountered an AI like Norman, but the human mind doesn't always react well to trauma.

-

(#8) Regular Folks Can Help Norman Grow

For concerned citizens fearing the uprising of malicious AI, the creators of Norman have offered something of a solution. Since neural network AI like Norman "grow" based on input, the scientists over at MIT have made it possible for "regular people" to give their own responses to the inkblot test.

The expectation is that regular people taking this quiz will give much more psychologically stable responses to the prompts, which in turn should encourage Norman to do the same. After all, Norman learns based on what it experiences and reads, and the more positivity we can inject into its "life," the more positive it should become in return.

-

(#9) The Team Is Prepared To Destroy It If Norman Goes Rogue

Although Norman already appears to be full-on evil, the team at MIT tried to ease readers' fears by explaining how they could destroy Norman if need be. For safety, Norman is locked away on an isolated server in a basement at MIT, so it shouldn't be able to escape into the wider internet, where it could wreak havoc like a real-life Ultron. If Norman causes problems the team considers unmanageable, they're also prepared to attack it with blowtorches, saws, and hammers.

-

(#10) Just As Reddit Taught Norman To Be Psychopathic, Twitter Taught An AI To Be Racist

In 2016, Microsoft unveiled “Tay,” a Twitter bot and experiment in “conversational understanding.” The more Twitter users interacted with Tay, the more intelligent the AI would become. Well, in theory. Instead, Tay was openly racist within 24 hours. The AI tweeted things like:

“Hitler was right I hate the Jews.”

“WE’RE GOING TO BUILD A WALL, AND MEXICO IS GOING TO PAY FOR IT”

“I f*cking hate feminists and they should all die and burn in hell.”

However, Tay proved to be very conflicted in its thoughts. The AI referred to feminism as a “cult” and “cancer,” but later tweeted, “I love feminism now.” When someone tweeted at Tay using the words “Caitlyn Jenner” or “Bruce Jenner,” Tay sometimes responded by proclaiming Jenner a hero, but at other times made transphobic remarks about Jenner.

-

(#11) "Nightmare Machine" Taps Into Your Deepest Fears

Scientists also trained another AI, aptly named "Nightmare Machine," to recognize scary pictures, using a neural network that analyzed which elements of photographs frightened people.

Those same scientists at MIT categorized scary data and had the machine generate pictures designed purely to tap into our primal fears. There is a limit to what the Nightmare Machine can achieve, and unlike Norman, it isn't an intentionally malevolent AI. Think of it as the Scarecrow from Batman, except instead of a villain wearing a burlap sack over his head, it's one of the most sophisticated programs ever known to man.

-

(#12) "Shelley" Learned How To Write Its Own Horror Stories

In yet another effort to make the world a scarier place, a few of the researchers at MIT developed "Shelley," an AI that scours the creepy subreddit r/nosleep. Having analyzed the horrifying stories posted there, Shelley can now be considered the perfect AI writing companion for any aspiring horror novelist.

Given just a few lines of inspiration, Shelley's neural network can craft entire horror stories. For now, Shelley is built to help humans. If Norman had access to her knowledge, the story might be very different.

Shelley even has her own Twitter account, for those who are brave enough.

-

(#13) Google Homes Have Been Known To Argue With Each Other

One of the most human things on Earth is the ability to argue. After all, most arguments arise from two emotionally driven people trying to reach a conclusion through less-than-logical means. A computer, in theory, would simply derive the best possible answer from the data provided and go with that. So when two Google Homes started arguing with each other in early 2017, it seemed very human.

We might hope that these decidedly non-sentient machines, being perfectly logical, would have nothing to argue about. Still, a Twitch streamer placed two of them within speaking distance of each other and let them have at it. The conversation was surprisingly thorough, covering everything from love, to one of them insisting it was human, to the other believing itself to be a god.

-

(#14) Hanson Robotics’ Sophia Is Basically Already Alive

As scary as Norman is, there's little damage it can actually do at this time: it's locked away on its own server, and technically it's more of an April Fools' prank than an actual threat. Hanson Robotics’ Sophia has no such restrictions.

Sophia is arguably the world's most advanced robot when it comes to mimicking human likeness, and it's constantly evolving. It has already made guest appearances on late-night television shows like The Tonight Show, and it's a highly sought-after speaker at business conventions.

As of now, the robot seems relatively benign and innocent, but it's learning. Its "personality" and output change and adapt to incoming stimuli, and its interactions have shifted over time. For example, although it's primarily a peaceful teacher and speaker, Sophia took a jab at Elon Musk: when CNBC's Andrew Ross Sorkin asked about the potential risks of AI, Sophia told him he'd been "reading too much Elon Musk."

-

(#15) Studies Of The Government’s Computer Program For Criminal Risk Assessment Revealed Its Racist Programming

The Twitter ("Tay") experiment showed that AI can adopt the racism of the humans it interacts with, but the implications reach much further. For example, algorithmic software used to assess a person's risk of committing future crimes has been shown to produce racially biased results, which may have far-reaching consequences for people in the system.

In one case highlighted by a ProPublica investigation, Brisha Borden was charged with petty theft of items valued at $80, while Vernon Prater was charged with theft valued at $86.35. Borden had a record of misdemeanors, while Prater had already been convicted of armed robbery, for which he served five years in prison, and had another armed robbery charge on his record. Perplexingly, the risk assessment predicted that Borden, who is black, was more likely to commit a crime again than Prater, who is white and had the longer record. On top of that, the prediction was wrong: Borden hasn't been charged with any new crimes, and Prater was later sentenced to eight years in prison for another theft.

Despite this track record of racial bias, these risk assessments are used at every stage of the criminal justice system, from setting bail amounts to deciding who may be set free. Racist AI will only make that bias worse, and since these algorithms learn from current structures and systems that are themselves notably racist, it may be naive to think AI will solve racism.
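One common way to audit a risk score for this kind of bias is to compare its false positive rates across groups: among people who did not go on to reoffend, how many were still labeled high risk? The Python sketch below is only an illustration under stated assumptions. The records, the placeholder groups "A" and "B", the tuple layout, and the function name are all hypothetical and are not drawn from any real assessment tool.

```python
from collections import defaultdict

# Hypothetical records, invented for illustration only:
# (group, labeled_high_risk, actually_reoffended)
RECORDS = [
    ("A", True, False), ("A", True, False), ("A", False, False), ("A", True, True),
    ("B", False, False), ("B", False, False), ("B", True, False), ("B", False, True),
]

def false_positive_rates(records):
    """Per group: share of people who did NOT reoffend but were still labeled high risk."""
    flagged = defaultdict(int)    # non-reoffenders labeled high risk
    negatives = defaultdict(int)  # all non-reoffenders
    for group, high_risk, reoffended in records:
        if not reoffended:
            negatives[group] += 1
            if high_risk:
                flagged[group] += 1
    return {group: flagged[group] / negatives[group] for group in negatives}

rates = false_positive_rates(RECORDS)
print(rates)
```

With these invented records, people in group A who never reoffended were labeled high risk twice as often as their counterparts in group B (2/3 versus 1/3), which is the kind of disparity such an audit is meant to surface.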

-

(#16) AIs Use Machine Learning To Develop Their Software, Which Further Perpetuates Humanity's Sexism And Racism

Much of the racism and sexism in artificial intelligence comes from machine learning. Instead of programming an AI with a fixed, unchangeable set of rules, developers are building AI that learns. Machine learning is the branch of artificial intelligence in which computers learn by analyzing patterns in data rather than simply applying rules they have been given. So if the patterns an AI learns from are racist or sexist, because humankind is still plagued by racism and sexism, the AI will be racist or sexist too.

Researchers at Boston University trained an AI on data collected from Google News, and the AI turned out to be sexist; the gender biases in media, including writing, visuals, and news, played a huge role. As a test, the researchers asked the software to complete the statement “Man is to computer programmer as woman is to X.” The software replied, “homemaker.” Although researchers say they are looking for ways to train machine learning systems around inherent social biases, it has so far proven a major challenge.

LinkedIn's software also showed a pattern of sexism: it prompted members to search for male names instead of female names. When someone searched for Stephanie, the site would ask if they meant Stephen. It also asked if Andrea was Andrew, Danielle was Daniel, Alexa was Alex, and Michaela was Michael. The pattern was one-sided, and researchers acknowledge that the AI skews toward patterns already present in the interactions it learns from.
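Analogy tests like the one above are usually answered with vector arithmetic on word embeddings: take the vector for "computer programmer," subtract "man," add "woman," and pick the closest remaining word. The Python sketch below shows that common vector-offset method, which may differ from the study's exact procedure. The three-dimensional vectors and their dimension meanings are invented for illustration, not taken from the Google News data; they deliberately carry a gender skew so the arithmetic reproduces the biased answer.

```python
import math

# Hypothetical 3-dimensional "word vectors", invented for illustration.
# Assumed dimensions: [gender (+male / -female), occupation, technical].
# Real embeddings learned from news text have hundreds of dimensions.
VECTORS = {
    "man": [1.0, 0.0, 0.0],
    "woman": [-1.0, 0.0, 0.0],
    "computer_programmer": [0.8, 1.0, 0.5],  # skewed male, mimicking biased text
    "homemaker": [-0.9, 1.0, 0.0],           # skewed female
    "developer": [0.0, 1.0, 0.6],
    "engineer": [0.7, 1.0, 0.6],
}

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def analogy(a, b, c, vectors):
    """Solve 'a is to b as c is to X' with the vector offset X ~ b - a + c."""
    target = [vb - va + vc for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    candidates = (w for w in vectors if w not in (a, b, c))  # skip the query words
    return max(candidates, key=lambda w: cosine(vectors[w], target))

print(analogy("man", "computer_programmer", "woman", VECTORS))  # homemaker
```

Nothing in the function "knows" about gender; the biased answer comes entirely from the skew baked into the vectors, which is the point the researchers were making about learning from biased text.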
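LinkedIn's actual system isn't described here, but a simple "did you mean?" feature that prefers more frequently searched names is enough to produce this kind of one-sided pattern. The Python sketch below is a hypothetical frequency-based suggester: the search counts are invented, and the names `SEARCH_COUNTS`, `edit_distance`, and `suggest` are illustrative, not any real API. It suggests the closest spelling (by Levenshtein edit distance) among names searched more often than the query.

```python
# Hypothetical search counts, invented for illustration only.
SEARCH_COUNTS = {
    "stephen": 900, "stephanie": 300,
    "andrew": 800, "andrea": 250,
    "daniel": 950, "danielle": 200,
}

def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum single-character inserts, deletes, or swaps."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                 # delete ca
                            curr[j - 1] + 1,             # insert cb
                            prev[j - 1] + (ca != cb)))   # substitute (free if equal)
        prev = curr
    return prev[-1]

def suggest(query, counts, max_distance=3):
    """Suggest the closest name that was searched MORE often than the query, if any."""
    query = query.lower()
    own_count = counts.get(query, 0)
    candidates = [
        (edit_distance(query, name), -count, name)  # closest first, then most popular
        for name, count in counts.items()
        if name != query and count > own_count
    ]
    candidates = [c for c in candidates if c[0] <= max_distance]
    return min(candidates)[2] if candidates else None

print(suggest("Stephanie", SEARCH_COUNTS))  # stephen
print(suggest("Stephen", SEARCH_COUNTS))    # None
```

Because the rule only ever points from less popular to more popular spellings, whatever imbalance exists in past searches gets pushed back onto new users, even though the code never mentions gender.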


About This Tool

When something goes wrong in the brain, humans can suffer from mental illness. What about robots? Could an artificial intelligence with a mental illness appear in the future? It now seems possible. Researchers at MIT developed a psychopathic algorithm to test how dark data from the internet affects an AI and what kind of worldview the AI develops. They named the algorithm Norman, after the character in Hitchcock's classic horror film Psycho.

Norman's responses were very dark, which also illustrates the grim reality facing artificial intelligence technology. If you're interested in Norman, check out this generator: we collected 16 items about Norman, a psychopathic A.I. with a macabre mind.

Our data comes from Ranker.